Below are the functions in this plugin:

- sshCommand: Executes the given command on a remote node.
- sshScript: Executes the given shell script on a remote node.
- sshGet: Gets a file/directory from the remote node to the current workspace.
- sshPut: Puts a file/directory from the current workspace to the remote node.
- sshRemove: Removes a file/directory from the remote node.

Step 1. Install the plugin in your Jenkins instance.

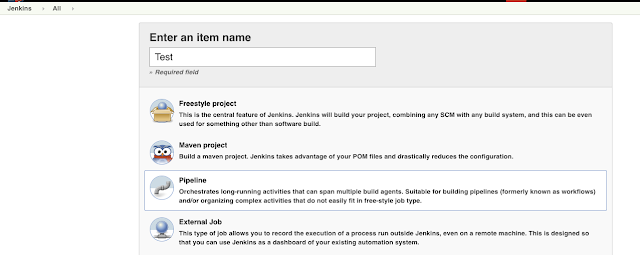

Step 2. Create a new pipeline job.

Step 3. Set the definition to Pipeline script, so that we can test scripts that use this plugin.

Step 4. Create the pipeline script.

    node {
        stage('test plugin') {
        }
    }

Step 5. To use the plugin functions, we first need to create a remote variable. Let's create it.

    node {
        def remote = [:]
        remote.name = 'testPlugin'
        remote.host = 'remoteMachineName'
        remote.user = 'loginuser'
        remote.password = 'loginpassword'
        stage('test plugin') {
        }
    }

The remote variable is a key/value map that stores the connection information (name, host, user, password) the plugin functions will use.
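Hardcoding the password in the script is fine for a quick test, but it can be avoided. The sketch below fills the same map from a Jenkins credential instead. It assumes the Credentials Binding plugin is installed and that a "Username with password" credential exists under the hypothetical ID `remote-login`. The variable names `SSH_USER` and `SSH_PASS` are also just illustrative. The `allowAnyHosts` setting skips host key verification and is probably only appropriate for experiments like this one.

```groovy
node {
    // 'remote-login' is a hypothetical credentials ID (assumption, not from the original sample).
    withCredentials([usernamePassword(credentialsId: 'remote-login',
                                      usernameVariable: 'SSH_USER',
                                      passwordVariable: 'SSH_PASS')]) {
        def remote = [:]
        remote.name = 'testPlugin'
        remote.host = 'remoteMachineName'
        // Values come from the bound credential instead of literals in the script.
        remote.user = SSH_USER
        remote.password = SSH_PASS
        // Assumption: skip known_hosts checking for a quick test only.
        remote.allowAnyHosts = true

        stage('test plugin') {
            // plugin steps that use 'remote' go here
        }
    }
}
```

The map is built inside the `withCredentials` block because the bound variables only exist there.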

Step 6. Call the remote functions.

    node {
        def remote = [:]
        remote.name = 'testPlugin'
        remote.host = 'remoteMachineName'
        remote.user = 'loginuser'
        remote.password = 'loginpassword'
        stage('test plugin') {
            sshPut remote: remote, from: 'test.txt', into: '.'
            sshCommand remote: remote, command: "ls"
        }
    }

In this sample, we first upload the test.txt file from the Jenkins workspace to the remote machine, where it lands in the login user's home directory (/home/loginuser). We then run the shell command ls to check that the file exists.
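The remaining functions follow the same pattern. Here is a rough sketch of sshScript, sshGet and sshRemove, assuming test.txt has already been uploaded as in the sample. The file names `check.sh` and `test_copy.txt` are made up for illustration, and `writeFile` is the standard Jenkins pipeline step, used only to create a script to run.

```groovy
node {
    def remote = [:]
    remote.name = 'testPlugin'
    remote.host = 'remoteMachineName'
    remote.user = 'loginuser'
    remote.password = 'loginpassword'

    stage('other steps') {
        // Create a small shell script in the workspace (check.sh is a hypothetical name).
        writeFile file: 'check.sh', text: 'ls -l'
        // Run that local script on the remote node.
        sshScript remote: remote, script: 'check.sh'
        // Copy the remote file back into the workspace under a new name.
        sshGet remote: remote, from: 'test.txt', into: 'test_copy.txt', override: true
        // Clean up the file on the remote node.
        sshRemove remote: remote, path: 'test.txt'
    }
}
```

Here `override: true` lets sshGet replace test_copy.txt if it already exists in the workspace.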

This is a basic sample of using the SSH Pipeline Steps plugin. For further information, please refer to the plugin's Git repository.

A little tip: because each plugin command closes its session and disconnects from the remote machine when it finishes, any process that command started is ended automatically. To keep a process running during the whole pipeline lifecycle, you will need to use the "nohup" command. In the next chapter, I will talk about how the 'nohup' command works.
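As a quick preview, a call with nohup might look like the sketch below. The script `long_task.sh` and its log file are hypothetical names. The output is redirected and the command is put in the background so that the SSH session can close without waiting for it. Whether the process really survives can depend on the remote shell and SSH server settings, so treat this as a starting point rather than a guaranteed recipe.

```groovy
node {
    def remote = [:]
    remote.name = 'testPlugin'
    remote.host = 'remoteMachineName'
    remote.user = 'loginuser'
    remote.password = 'loginpassword'

    stage('start background task') {
        // long_task.sh is a hypothetical script that already exists on the remote machine.
        // nohup ignores the hangup signal; '&' backgrounds the process;
        // redirecting stdout/stderr keeps it from holding the session open.
        sshCommand remote: remote, command: 'nohup ./long_task.sh > long_task.log 2>&1 &'
    }
}
```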